Imagine a world where robots react to their surroundings with the speed and intuition of a human, where autonomous vehicles process complex visual data the moment it arrives, and where AI systems learn and adapt in real time, much as our brains do. That future is getting closer, thanks to a groundbreaking innovation from researchers at RMIT University in Australia.

A Leap Towards Biological Computing

Scientists have developed a compact device that mimics the human brain’s ability to process information, a major step forward in the quest for truly intelligent and adaptable machines. Because it senses, processes, and stores information in a single device instead of handing raw data to a separate computer, it marks a shift in how we approach computing, toward what’s known as “biological computing.”

How It Works: Mimicking the Brain’s Neural Network

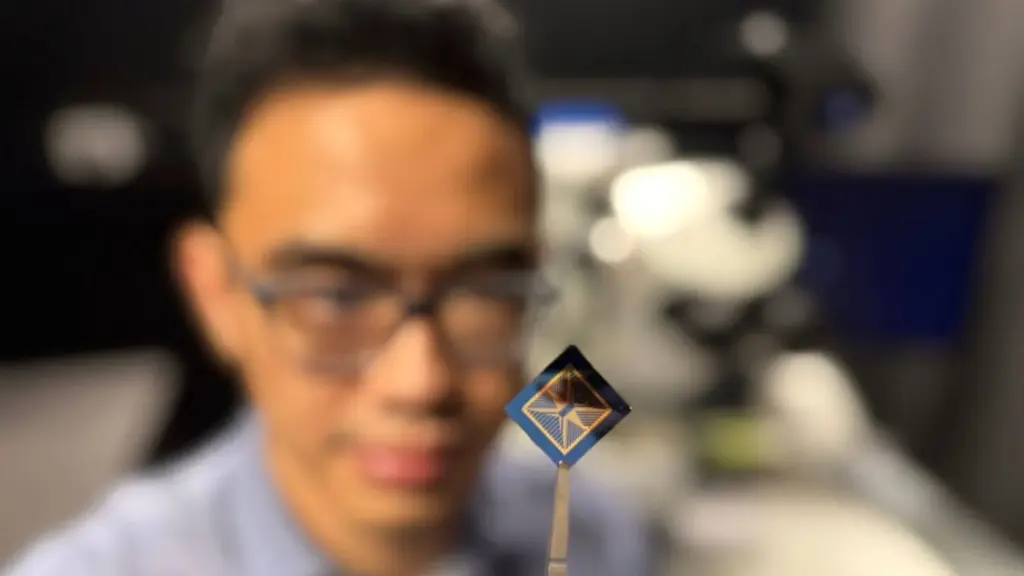

The device, built by a team at RMIT, can detect hand movements, store memories, and process visual data in real time, all without relying on a traditional external computer. The key lies in its use of “spiking neural networks” (SNNs), which are modeled after real brain cells.

At the heart of the technology is molybdenum disulfide (MoS₂), a metal compound with atomic-scale defects. These defects allow the material to detect light and convert it into electrical signals, much as neurons in our brains respond to stimuli. The team used the “leaky integrate-and-fire” (LIF) model, a common approach in SNNs. In this model, a neuron’s electrical potential builds up as input arrives and gradually leaks away when it does not; once the potential crosses a threshold, the neuron sends out a signal (a “spike”) and resets.
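To make the LIF behavior concrete, here is a minimal software sketch of the textbook model, assuming a forward-Euler update and illustrative, hypothetical parameter values (`tau`, `resistance`, `v_threshold`, and so on). It simulates a single idealized neuron; it is not a model of the RMIT device, where the MoS₂ light response physically supplies the input and the leak.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LIFNeuron:
    """Textbook leaky integrate-and-fire neuron; parameter values are hypothetical."""
    tau: float = 10.0          # membrane time constant, in time steps
    resistance: float = 1.0    # scales input current into voltage
    v_rest: float = 0.0        # potential the neuron leaks back toward
    v_threshold: float = 1.0   # potential at which the neuron spikes
    v_reset: float = 0.0       # potential right after a spike
    dt: float = 1.0            # simulation time step
    v: float = field(init=False)

    def __post_init__(self) -> None:
        self.v = self.v_rest

    def step(self, current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Forward-Euler step of tau * dv/dt = -(v - v_rest) + R * I:
        # the (v_rest - v) term is the leak, the R * I term integrates the input.
        self.v += (self.dt / self.tau) * (self.v_rest - self.v + self.resistance * current)
        if self.v >= self.v_threshold:
            self.v = self.v_reset  # fire, then reset
            return True
        return False


def spike_times(currents: List[float], **params: float) -> List[int]:
    """Run a fresh neuron over an input sequence and return the time steps that spiked."""
    neuron = LIFNeuron(**params)
    return [t for t, current in enumerate(currents) if neuron.step(current)]


if __name__ == "__main__":
    print(spike_times([2.0] * 20))  # strong input: regular spikes -> [6, 13]
    print(spike_times([0.5] * 20))  # weak input leaks away before threshold -> []
```

With a constant input of 2.0, the potential climbs toward 2.0 but resets every time it passes 1.0, producing a regular spike train; with 0.5 it settles at 0.5 and never fires. That threshold-and-leak behavior is what lets an SNN stay quiet until its input is strong or sustained enough to matter.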

Real-Time Vision Processing with MoS₂

The researchers built a spiking neural network using the key light-response features of MoS₂. The device achieved 75 percent accuracy on static image tasks after 15 training cycles and 80 percent accuracy on dynamic tasks after 60 cycles, suggesting strong potential for real-time vision processing. It detected hand movements using edge detection, registering where the scene changed instead of capturing it frame by frame, which significantly reduces data and power consumption. Crucially, the device also stored these changes as memories, much as the brain does.
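The frame-free idea can be illustrated with event-camera-style change detection, a swapped-in technique named here for illustration rather than the team’s published method. In this minimal sketch, a pixel reports an event only when its log intensity changes by at least a hypothetical `threshold`, so a static background produces no data at all. Two frames are used purely to simulate a scene changing over time; in event-driven hardware, pixels respond individually as the light falling on them changes.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]     # light intensity per pixel, arbitrary units
Event = Tuple[int, int, int]  # (row, col, polarity): +1 brighter, -1 darker


def change_events(prev: Frame, curr: Frame, threshold: float = 0.2) -> List[Event]:
    """Emit an event for each pixel whose log intensity changed by at least `threshold`."""
    eps = 1e-6  # avoids log(0) for completely dark pixels
    events: List[Event] = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (before, after) in enumerate(zip(prev_row, curr_row)):
            # Comparing log intensities makes the threshold act on relative contrast,
            # so the same fractional change triggers an event in dim and bright regions alike.
            delta = math.log(after + eps) - math.log(before + eps)
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


if __name__ == "__main__":
    # A bright edge (say, the side of a hand) moves one pixel to the right.
    before = [[0.1, 1.0, 0.1, 0.1],
              [0.1, 1.0, 0.1, 0.1]]
    after = [[0.1, 0.1, 1.0, 0.1],
             [0.1, 0.1, 1.0, 0.1]]
    print(change_events(before, after))
    # -> [(0, 1, -1), (0, 2, 1), (1, 1, -1), (1, 2, 1)]
    print(change_events(before, before))  # static scene -> []
```

In this toy scene, four events replace eight pixel readings, and only the moving edge shows up; the saving grows with how much of a real scene stays still.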

Beyond the Prototype: The Future of Smart Sensing

This work builds on earlier research in the ultraviolet spectrum, which focused on still image detection, memory, and processing. The team is now scaling the single-pixel prototype into a larger MoS₂-based pixel array, supported by new research funding. Plans include optimizing the device for more complex vision tasks, improving energy efficiency, and integrating it with conventional digital systems. They’re also exploring other materials to expand capabilities into the infrared range for applications like emission tracking and smart environmental sensing.

Implications for Robotics and AI

The technology could have a wide-ranging impact. By detecting scene changes as they happen, with minimal data processing, the device enables faster and more efficient reactions, a critical advantage for autonomous vehicles and advanced robots operating in high-risk or fast-changing environments. It could also significantly improve human-robot interaction in areas such as manufacturing and personal assistance.

Sourced from https://interestingengineering.com

Additional Details: